diff --git a/.github/workflows/build.yaml b/.github/workflows/build.yaml

index 1ecbbb80..c20dc212 100644

--- a/.github/workflows/build.yaml

+++ b/.github/workflows/build.yaml

@@ -9,6 +9,8 @@ jobs:

steps:

- uses: actions/checkout@v2

+ with:

+ submodules: recursive

- name: Cache PlatformIO

uses: actions/cache@v2

diff --git a/.gitmodules b/.gitmodules

new file mode 100644

index 00000000..6c7c1d4f

--- /dev/null

+++ b/.gitmodules

@@ -0,0 +1,9 @@

+[submodule "code/components/esp32-camera-master"]

+ path = code/components/esp32-camera-master

+ url = https://github.com/espressif/esp32-camera.git

+[submodule "code/components/esp-nn"]

+ path = code/components/esp-nn

+ url = https://github.com/espressif/esp-nn.git

+[submodule "code/components/tflite-micro-esp-examples"]

+ path = code/components/tflite-micro-esp-examples

+ url = https://github.com/espressif/tflite-micro-esp-examples.git

diff --git a/README.md b/README.md

index 990e8606..d9464715 100644

--- a/README.md

+++ b/README.md

@@ -52,6 +52,9 @@ A 3d-printable housing can be found here:

- https://www.thingiverse.com/thing:5028229 (Power Meter)

- https://www.thingiverse.com/thing:4571627 (ESP32-Cam housing only)

+## Build it yourself

+See [Build Instructions](code/README.md).

+

## Donate

If you would like to support the developer with a cup of coffee you can do that via [Paypal](https://www.paypal.com/donate?hosted_button_id=8TRSVYNYKDSWL).

diff --git a/code/CMakeLists.txt b/code/CMakeLists.txt

index c0852a05..880cc5fc 100644

--- a/code/CMakeLists.txt

+++ b/code/CMakeLists.txt

@@ -1,6 +1,6 @@

cmake_minimum_required(VERSION 3.13.4)

-list(APPEND EXTRA_COMPONENT_DIRS $ENV{IDF_PATH}/examples/common_components/protocol_examples_common)

+list(APPEND EXTRA_COMPONENT_DIRS $ENV{IDF_PATH}/examples/common_components/protocol_examples_common components/tflite-micro-esp-examples/components/tflite-lib)

ADD_CUSTOM_COMMAND(

OUTPUT ${CMAKE_CURRENT_BINARY_DIR}/version.cpp

diff --git a/code/README.md b/code/README.md

new file mode 100644

index 00000000..63483297

--- /dev/null

+++ b/code/README.md

@@ -0,0 +1,64 @@

+# Build

+

+## Preparations

+```

+git clone https://github.com/jomjol/AI-on-the-edge-device.git

+cd AI-on-the-edge-device

+git checkout rolling

+git submodule update --init

+```

+

+## Build and Flash in a Terminal

+See further down for how to build within an IDE.

+### Compile

+```

+cd code

+platformio run --environment esp32cam

+```

+

+### Upload

+```

+pio run --target upload

+```

+

+If the upload cannot find the device:

+1. Make sure it is in bootloader mode.

+1. Set the UART device correctly: in `platformio.ini`, set `upload_port`, e.g. `upload_port = /dev/ttyUSB0`.
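
+

+For example, the relevant part of `platformio.ini` could look like the minimal sketch below. It assumes the environment is named `esp32cam` (as in the compile command above) and that the board appears as `/dev/ttyUSB0` (on Windows it would be a `COM` port instead); the `monitor_speed` of 115200 baud is also an assumption. Add the lines to the existing `[env:esp32cam]` section rather than creating a second one.

+```

+[env:esp32cam]

+; serial port used by `pio run --target upload` (assumed Linux USB-serial adapter)

+upload_port = /dev/ttyUSB0

+; port and baud rate used by `pio device monitor` (assumed values)

+monitor_port = /dev/ttyUSB0

+monitor_speed = 115200

+```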

+

+### Monitor UART Log

+```

+pio device monitor

+```

+

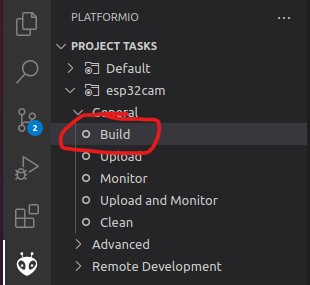

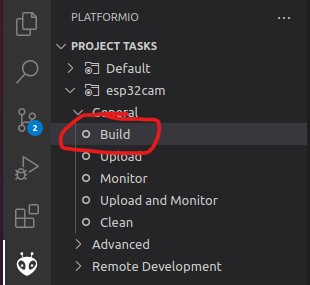

+## Build and Flash with the Visual Studio Code IDE

+

+- Download and install VS Code

+ - https://code.visualstudio.com/Download

+- Install the PlatformIO IDE extension for VS Code

+ - Check for error messages; you may need to install some Python libraries manually

+ - e.g. on my Ubuntu machine, `python3-venv` was missing: `sudo apt-get install python3-venv`

+- Clone this project

+ - on Linux:

+

+ ```

+ git clone https://github.com/jomjol/AI-on-the-edge-device.git

+ cd AI-on-the-edge-device

+ git checkout rolling

+ git submodule update --init

+ ```

+

+- In VS Code, open the `AI-on-the-edge-device/code` folder

+ - from a terminal: `cd AI-on-the-edge-device/code && code .`

+- Open a PlatformIO terminal (click the terminal icon in the bottom status bar)

+- Make sure you are in the `code` directory

+- To build, type `platformio run --environment esp32cam`

+ - or use the build button of the PlatformIO graphical interface

+ - The build artifacts are stored in `code/.pio/build/esp32cam/`

+- Connect the device and run `pio device monitor`. Its output shows which serial port your device uses; copy that name for the next step

+- Add `upload_port = your_device_port` to the `platformio.ini` file

+- Make sure an SD card with the contents of the `sd_card` folder is inserted and that you have adjusted the Wi-Fi details

+- Run `pio run --target erase` to erase the flash

+- Run `pio run --target upload` to upload `bootloader.bin`, `partitions.bin` and `firmware.bin` from the `code/.pio/build/esp32cam/` folder

+- Run `pio device monitor` to observe the logs via UART

diff --git a/code/components/esp-nn b/code/components/esp-nn

new file mode 160000

index 00000000..6b3ef8e2

--- /dev/null

+++ b/code/components/esp-nn

@@ -0,0 +1 @@

+Subproject commit 6b3ef8e226a05554a6d874f6456f5ca1771c01c2

diff --git a/code/components/esp-nn/.gitignore b/code/components/esp-nn/.gitignore

deleted file mode 100644

index 08ca72b5..00000000

--- a/code/components/esp-nn/.gitignore

+++ /dev/null

@@ -1,57 +0,0 @@

-.config

-*.o

-*.i

-*.s

-*.orig

-*.pyc

-

-# gtags

-GTAGS

-GRTAGS

-GPATH

-

-# emacs

-.dir-locals.el

-

-# emacs temp file suffixes

-*~

-.#*

-\#*#

-

-# eclipse setting

-.settings

-

-# MacOS directory files

-.DS_Store

-

-# Example project files

-examples/**/sdkconfig

-examples/**/sdkconfig.old

-examples/**/build

-

-# Test app files

-test_app/build

-test_app/sdkconfig

-test_app/sdkconfig.old

-

-# Doc build artifacts

-docs/_build/

-docs/doxygen-warning-log.txt

-docs/sphinx-warning-log.txt

-docs/sphinx-warning-log-sanitized.txt

-docs/xml/

-docs/xml_in/

-docs/man/

-docs/doxygen_sqlite3.db

-

-TEST_LOGS

-

-

-# gcov coverage reports

-*.gcda

-*.gcno

-coverage.info

-coverage_report/

-

-# VS Code Settings

-.vscode/

diff --git a/code/components/esp-nn/.gitlab-ci.yml b/code/components/esp-nn/.gitlab-ci.yml

deleted file mode 100644

index 6b540bda..00000000

--- a/code/components/esp-nn/.gitlab-ci.yml

+++ /dev/null

@@ -1,55 +0,0 @@

-stages:

- - build

-

-variables:

- BATCH_BUILD: "1"

- V: "0"

- MAKEFLAGS: "-j8 --no-keep-going"

- IDF_PATH: "$CI_PROJECT_DIR/esp-idf"

- LOG_PATH: "$CI_PROJECT_DIR"

-

-.set_git_config: &set_git_config

- # Set git config

- - git config user.email "test@espressif.com"

- - git config user.name "Espressif"

-

-.add_ssh_key: &add_ssh_key

- # Add gitlab ssh key

- - mkdir -p ~/.ssh

- - chmod 700 ~/.ssh

- - echo -n $GITLAB_KEY > ~/.ssh/id_rsa_base64

- - base64 --decode --ignore-garbage ~/.ssh/id_rsa_base64 > ~/.ssh/id_rsa

- - chmod 600 ~/.ssh/id_rsa

- - echo -e "Host gitlab.espressif.cn\n\tStrictHostKeyChecking no\n" >> ~/.ssh/config

-

-before_script:

- # Add gitlab ssh key

- - *add_ssh_key

- # Set git config

- - *set_git_config

-

-.build_esp32s3: &build_esp32s3

- - idf.py set-target esp32s3 build

-

-.build_esp32: &build_esp32

- - idf.py set-target esp32 build

-

-build_demo:

- stage: build

- image: $CI_DOCKER_REGISTRY/esp32-ci-env:esp-nn

- tags:

- - build

- script:

- # Clone IDF

- - git clone --recursive --single-branch -b release/v4.4 --reference-if-able /local_references/gitlab/ https://gitlab-ci-token:${BOT_TOKEN}@gitlab.espressif.cn:6688/espressif/esp-idf.git

- - cd esp-idf

- - ./install.sh

- - . ./export.sh

- - cd ..

- # Build examples now

- - cd test_app

- # Build esp32s3

- - *build_esp32s3

- # Build esp32

- - *build_esp32

- - cd -

diff --git a/code/components/esp-nn/CMakeLists.txt b/code/components/esp-nn/CMakeLists.txt

deleted file mode 100644

index ba45866a..00000000

--- a/code/components/esp-nn/CMakeLists.txt

+++ /dev/null

@@ -1,50 +0,0 @@

-idf_build_get_property(idf_target IDF_TARGET)

-

-set(c_srcs

- "src/activation_functions/esp_nn_relu_ansi.c"

- "src/basic_math/esp_nn_add_ansi.c"

- "src/basic_math/esp_nn_mul_ansi.c"

- "src/convolution/esp_nn_conv_ansi.c"

- "src/convolution/esp_nn_conv_opt.c"

- "src/convolution/esp_nn_depthwise_conv_ansi.c"

- "src/convolution/esp_nn_depthwise_conv_opt.c"

- "src/fully_connected/esp_nn_fully_connected_ansi.c"

- "src/softmax/esp_nn_softmax_ansi.c"

- "src/softmax/esp_nn_softmax_opt.c"

- "src/pooling/esp_nn_avg_pool_ansi.c"

- "src/pooling/esp_nn_max_pool_ansi.c")

-

-if(CONFIG_IDF_TARGET_ESP32S3)

- set(s3_srcs

- "src/common/esp_nn_common_functions_esp32s3.S"

- "src/common/esp_nn_multiply_by_quantized_mult_esp32s3.S"

- "src/common/esp_nn_multiply_by_quantized_mult_ver1_esp32s3.S"

- "src/activation_functions/esp_nn_relu_s8_esp32s3.S"

- "src/basic_math/esp_nn_add_s8_esp32s3.S"

- "src/basic_math/esp_nn_mul_s8_esp32s3.S"

- "src/convolution/esp_nn_conv_esp32s3.c"

- "src/convolution/esp_nn_depthwise_conv_s8_esp32s3.c"

- "src/convolution/esp_nn_conv_s16_mult8_esp32s3.S"

- "src/convolution/esp_nn_conv_s8_mult8_1x1_esp32s3.S"

- "src/convolution/esp_nn_conv_s16_mult4_1x1_esp32s3.S"

- "src/convolution/esp_nn_depthwise_conv_s8_mult1_3x3_padded_esp32s3.S"

- "src/convolution/esp_nn_depthwise_conv_s16_mult1_esp32s3.S"

- "src/convolution/esp_nn_depthwise_conv_s16_mult1_3x3_esp32s3.S"

- "src/convolution/esp_nn_depthwise_conv_s16_mult1_3x3_no_pad_esp32s3.S"

- "src/convolution/esp_nn_depthwise_conv_s16_mult8_3x3_esp32s3.S"

- "src/convolution/esp_nn_depthwise_conv_s16_mult4_esp32s3.S"

- "src/convolution/esp_nn_depthwise_conv_s16_mult8_esp32s3.S"

- "src/fully_connected/esp_nn_fully_connected_s8_esp32s3.S"

- "src/pooling/esp_nn_max_pool_s8_esp32s3.S"

- "src/pooling/esp_nn_avg_pool_s8_esp32s3.S")

-endif()

-

-idf_component_register(SRCS "${c_srcs}"

- "${s3_srcs}"

- INCLUDE_DIRS "include" "src/common")

-

-if(CONFIG_IDF_TARGET_ESP32S3)

- target_compile_options(${COMPONENT_LIB} PRIVATE -mlongcalls -fno-unroll-loops -O2 -Wno-unused-function)

-else()

- target_compile_options(${COMPONENT_LIB} PRIVATE -Wno-unused-function)

-endif()

\ No newline at end of file

diff --git a/code/components/esp-nn/Kconfig.projbuild b/code/components/esp-nn/Kconfig.projbuild

deleted file mode 100644

index a146305b..00000000

--- a/code/components/esp-nn/Kconfig.projbuild

+++ /dev/null

@@ -1,29 +0,0 @@

-menu "ESP-NN"

-

-choice NN_OPTIMIZATIONS

- bool "Optimization for nn functions"

- default NN_OPTIMIZED

- help

- Use ANSI-C versions for verification and debug purpose.

- Optimisations are automatically picked up for a chipset.

- For ESP32-S3, assembly optimisations are selected.

- For other platforms(viz., ESP32, ESP32-C3), generic optimisations are used.

-

-config NN_ANSI_C

- bool "ANSI C"

- help

- ANSI C versions for verification and debug purposes.

-config NN_OPTIMIZED

- bool "Optimized versions"

- help

- Optimisations are automatically picked up for a chipset.

- For ESP32-S3, assembly optimisations are selected.

- For other platforms(viz., ESP32, ESP32-C3), generic optimisations are used.

-endchoice

-

-config NN_OPTIMIZATIONS

- int

- default 0 if NN_ANSI_C

- default 1 if NN_OPTIMIZED

-

-endmenu

diff --git a/code/components/esp-nn/LICENSE b/code/components/esp-nn/LICENSE

deleted file mode 100644

index d6456956..00000000

--- a/code/components/esp-nn/LICENSE

+++ /dev/null

@@ -1,202 +0,0 @@

-

- Apache License

- Version 2.0, January 2004

- http://www.apache.org/licenses/

-

- TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

-

- 1. Definitions.

-

- "License" shall mean the terms and conditions for use, reproduction,

- and distribution as defined by Sections 1 through 9 of this document.

-

- "Licensor" shall mean the copyright owner or entity authorized by

- the copyright owner that is granting the License.

-

- "Legal Entity" shall mean the union of the acting entity and all

- other entities that control, are controlled by, or are under common

- control with that entity. For the purposes of this definition,

- "control" means (i) the power, direct or indirect, to cause the

- direction or management of such entity, whether by contract or

- otherwise, or (ii) ownership of fifty percent (50%) or more of the

- outstanding shares, or (iii) beneficial ownership of such entity.

-

- "You" (or "Your") shall mean an individual or Legal Entity

- exercising permissions granted by this License.

-

- "Source" form shall mean the preferred form for making modifications,

- including but not limited to software source code, documentation

- source, and configuration files.

-

- "Object" form shall mean any form resulting from mechanical

- transformation or translation of a Source form, including but

- not limited to compiled object code, generated documentation,

- and conversions to other media types.

-

- "Work" shall mean the work of authorship, whether in Source or

- Object form, made available under the License, as indicated by a

- copyright notice that is included in or attached to the work

- (an example is provided in the Appendix below).

-

- "Derivative Works" shall mean any work, whether in Source or Object

- form, that is based on (or derived from) the Work and for which the

- editorial revisions, annotations, elaborations, or other modifications

- represent, as a whole, an original work of authorship. For the purposes

- of this License, Derivative Works shall not include works that remain

- separable from, or merely link (or bind by name) to the interfaces of,

- the Work and Derivative Works thereof.

-

- "Contribution" shall mean any work of authorship, including

- the original version of the Work and any modifications or additions

- to that Work or Derivative Works thereof, that is intentionally

- submitted to Licensor for inclusion in the Work by the copyright owner

- or by an individual or Legal Entity authorized to submit on behalf of

- the copyright owner. For the purposes of this definition, "submitted"

- means any form of electronic, verbal, or written communication sent

- to the Licensor or its representatives, including but not limited to

- communication on electronic mailing lists, source code control systems,

- and issue tracking systems that are managed by, or on behalf of, the

- Licensor for the purpose of discussing and improving the Work, but

- excluding communication that is conspicuously marked or otherwise

- designated in writing by the copyright owner as "Not a Contribution."

-

- "Contributor" shall mean Licensor and any individual or Legal Entity

- on behalf of whom a Contribution has been received by Licensor and

- subsequently incorporated within the Work.

-

- 2. Grant of Copyright License. Subject to the terms and conditions of

- this License, each Contributor hereby grants to You a perpetual,

- worldwide, non-exclusive, no-charge, royalty-free, irrevocable

- copyright license to reproduce, prepare Derivative Works of,

- publicly display, publicly perform, sublicense, and distribute the

- Work and such Derivative Works in Source or Object form.

-

- 3. Grant of Patent License. Subject to the terms and conditions of

- this License, each Contributor hereby grants to You a perpetual,

- worldwide, non-exclusive, no-charge, royalty-free, irrevocable

- (except as stated in this section) patent license to make, have made,

- use, offer to sell, sell, import, and otherwise transfer the Work,

- where such license applies only to those patent claims licensable

- by such Contributor that are necessarily infringed by their

- Contribution(s) alone or by combination of their Contribution(s)

- with the Work to which such Contribution(s) was submitted. If You

- institute patent litigation against any entity (including a

- cross-claim or counterclaim in a lawsuit) alleging that the Work

- or a Contribution incorporated within the Work constitutes direct

- or contributory patent infringement, then any patent licenses

- granted to You under this License for that Work shall terminate

- as of the date such litigation is filed.

-

- 4. Redistribution. You may reproduce and distribute copies of the

- Work or Derivative Works thereof in any medium, with or without

- modifications, and in Source or Object form, provided that You

- meet the following conditions:

-

- (a) You must give any other recipients of the Work or

- Derivative Works a copy of this License; and

-

- (b) You must cause any modified files to carry prominent notices

- stating that You changed the files; and

-

- (c) You must retain, in the Source form of any Derivative Works

- that You distribute, all copyright, patent, trademark, and

- attribution notices from the Source form of the Work,

- excluding those notices that do not pertain to any part of

- the Derivative Works; and

-

- (d) If the Work includes a "NOTICE" text file as part of its

- distribution, then any Derivative Works that You distribute must

- include a readable copy of the attribution notices contained

- within such NOTICE file, excluding those notices that do not

- pertain to any part of the Derivative Works, in at least one

- of the following places: within a NOTICE text file distributed

- as part of the Derivative Works; within the Source form or

- documentation, if provided along with the Derivative Works; or,

- within a display generated by the Derivative Works, if and

- wherever such third-party notices normally appear. The contents

- of the NOTICE file are for informational purposes only and

- do not modify the License. You may add Your own attribution

- notices within Derivative Works that You distribute, alongside

- or as an addendum to the NOTICE text from the Work, provided

- that such additional attribution notices cannot be construed

- as modifying the License.

-

- You may add Your own copyright statement to Your modifications and

- may provide additional or different license terms and conditions

- for use, reproduction, or distribution of Your modifications, or

- for any such Derivative Works as a whole, provided Your use,

- reproduction, and distribution of the Work otherwise complies with

- the conditions stated in this License.

-

- 5. Submission of Contributions. Unless You explicitly state otherwise,

- any Contribution intentionally submitted for inclusion in the Work

- by You to the Licensor shall be under the terms and conditions of

- this License, without any additional terms or conditions.

- Notwithstanding the above, nothing herein shall supersede or modify

- the terms of any separate license agreement you may have executed

- with Licensor regarding such Contributions.

-

- 6. Trademarks. This License does not grant permission to use the trade

- names, trademarks, service marks, or product names of the Licensor,

- except as required for reasonable and customary use in describing the

- origin of the Work and reproducing the content of the NOTICE file.

-

- 7. Disclaimer of Warranty. Unless required by applicable law or

- agreed to in writing, Licensor provides the Work (and each

- Contributor provides its Contributions) on an "AS IS" BASIS,

- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

- implied, including, without limitation, any warranties or conditions

- of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

- PARTICULAR PURPOSE. You are solely responsible for determining the

- appropriateness of using or redistributing the Work and assume any

- risks associated with Your exercise of permissions under this License.

-

- 8. Limitation of Liability. In no event and under no legal theory,

- whether in tort (including negligence), contract, or otherwise,

- unless required by applicable law (such as deliberate and grossly

- negligent acts) or agreed to in writing, shall any Contributor be

- liable to You for damages, including any direct, indirect, special,

- incidental, or consequential damages of any character arising as a

- result of this License or out of the use or inability to use the

- Work (including but not limited to damages for loss of goodwill,

- work stoppage, computer failure or malfunction, or any and all

- other commercial damages or losses), even if such Contributor

- has been advised of the possibility of such damages.

-

- 9. Accepting Warranty or Additional Liability. While redistributing

- the Work or Derivative Works thereof, You may choose to offer,

- and charge a fee for, acceptance of support, warranty, indemnity,

- or other liability obligations and/or rights consistent with this

- License. However, in accepting such obligations, You may act only

- on Your own behalf and on Your sole responsibility, not on behalf

- of any other Contributor, and only if You agree to indemnify,

- defend, and hold each Contributor harmless for any liability

- incurred by, or claims asserted against, such Contributor by reason

- of your accepting any such warranty or additional liability.

-

- END OF TERMS AND CONDITIONS

-

- APPENDIX: How to apply the Apache License to your work.

-

- To apply the Apache License to your work, attach the following

- boilerplate notice, with the fields enclosed by brackets "[]"

- replaced with your own identifying information. (Don't include

- the brackets!) The text should be enclosed in the appropriate

- comment syntax for the file format. We also recommend that a

- file or class name and description of purpose be included on the

- same "printed page" as the copyright notice for easier

- identification within third-party archives.

-

- Copyright [yyyy] [name of copyright owner]

-

- Licensed under the Apache License, Version 2.0 (the "License");

- you may not use this file except in compliance with the License.

- You may obtain a copy of the License at

-

- http://www.apache.org/licenses/LICENSE-2.0

-

- Unless required by applicable law or agreed to in writing, software

- distributed under the License is distributed on an "AS IS" BASIS,

- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

- See the License for the specific language governing permissions and

- limitations under the License.

diff --git a/code/components/esp-nn/README.md b/code/components/esp-nn/README.md

deleted file mode 100644

index f70f4074..00000000

--- a/code/components/esp-nn/README.md

+++ /dev/null

@@ -1,55 +0,0 @@

-# ESP-NN

-

-The library contains optimised NN (Neural Network) functions for various Espressif chipsets.

-

-* Supported platforms:

- * TensorFlow Lite Micro (TFLite Micro). Repo can be found [here](https://github.com/espressif/tflite-micro-esp-examples)

-

-* Supported ESP chipsets include:

- * ESP32-S3 (Assembly versions optimised to benefit from vector instructions of ESP32-S3)

- * ESP32 (Generic optimisations)

- * ESP32-C3 (Generic optimisations)

-

-## Performance

-

-### Kernelwise performance for s8 versions:

-

- * Kernelwise performance on ESP32-S3 chip

- * Numbers are ticks taken for kernel to execute

- * Chip config: 240MHz, SPI: QPI 80MHz, Data cache: 64KB

-

- | Function | ANSI C | ESP32-S3 Opt | Opt Ratio | Data info | Memory |

- | ----------------| --------|---------|---------|-------------|-----------|

- | elementwise_add | 320397 | 87119 | 3.68 | size = 1615 | External |

- | elementwise_mul | 125958 | 44239 | 2.85 | size = 1615 | External |

- | convolution | 4663012 | 428675 | 10.88 | input(10,10), filter(64x1x1x64) | External |

- | convolution | 301014 | 32433 | 9.28 | input(8,8), filter(16x1x1x16) | External |

- | convolution | 2115418 | 1020923 | 2.07 | input(10,10), filter(64x3x3x3) | External |

- | depthwise conv | 1190062 | 203278 | 5.85 | input (18, 18), pad(0,0), stride(1,1) filter: 1x3x3x16 | External |

- | depthwise conv | 837072 | 182335 | 4.59 | input (12, 12), pad(1,1), stride(1,1) filter: 8x5x5x4 | External |

- | max pool | 485714 | 76747 | 6.33 | input(16,16), filter (1x3x3x16) | Internal |

- | avg pool | 541462 | 160580 | 3.37 | input(16,16), filter (1x3x3x16) | Internal |

- | fully connected | 15853 | 9547 | 1.66 | len: 265, ch = 3 | Internal |

- | prelu (relu6) | 19472 | 2734 | 7.12 | size, 1615 | Internal |

-

-

-## Configuration

-

- * To configure, please use `idf.py menuconfig` and under `ESP-NN` select `NN_OPTIMIZATIONS`

- * There are two options presented:

- * Optimized versions

- * ANSI C

-

- * Default selection is for `Optimized versions`. For ESP32-S3, assembly versions are automatically selected, whereas for other chipsets (viz., ESP32, ESP32-C3), generic optimisations are selected.

- * For debugging purposes, you may want to select `ANSI C` reference versions.

-

-

-## Contributing

-

-If you encounter an issue with ESP-NN, or wish to submit a feature request, please use the Issues section on the Github.

-

-For general questions related to this library, please use the esp32.com forum.

-

-## Copyrights and License

-

-All original source code in this repository is Copyright (C) 2020-2021 Espressif Systems. This source code is licensed under the Apache License 2.0 as described in the file LICENSE.

diff --git a/code/components/esp-nn/include/esp_nn.h b/code/components/esp-nn/include/esp_nn.h

deleted file mode 100644

index bd533119..00000000

--- a/code/components/esp-nn/include/esp_nn.h

+++ /dev/null

@@ -1,46 +0,0 @@

-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#pragma once

-

-#if defined(CONFIG_NN_OPTIMIZED)

-// select apt optimisations

-#ifdef CONFIG_IDF_TARGET_ESP32S3

-#define ARCH_ESP32_S3 1

-#endif

-#ifdef CONFIG_IDF_TARGET_ESP32

-#define ARCH_ESP32 1

-#endif

-#endif

-

-#ifdef __cplusplus

-extern "C" {

-#endif

-

-/* reference kernels included by default */

-#include "esp_nn_ansi_headers.h"

-

-#if defined(CONFIG_NN_OPTIMIZED)

-#if defined(ARCH_ESP32_S3)

-#include "esp_nn_esp32s3.h"

-#else // for other platforms use generic optimisations

-#include "esp_nn_generic_opt.h"

-#endif // #if defined(ARCH_ESP32_S3)

-#else

-#include "esp_nn_ansi_c.h"

-#endif

-

-#ifdef __cplusplus

-}

-#endif

diff --git a/code/components/esp-nn/include/esp_nn_ansi_c.h b/code/components/esp-nn/include/esp_nn_ansi_c.h

deleted file mode 100644

index 8279ebef..00000000

--- a/code/components/esp-nn/include/esp_nn_ansi_c.h

+++ /dev/null

@@ -1,47 +0,0 @@

-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-/**

- * @file Header definitions to include for ANSI C versions.

- * These are just typedefs to pick up ANSI versions.

- */

-

-#pragma once

-

-#include "esp_nn_defs.h"

-#include "esp_nn_ansi_headers.h"

-

-#define esp_nn_add_elementwise_s8 esp_nn_add_elementwise_s8_ansi

-#define esp_nn_mul_elementwise_s8 esp_nn_mul_elementwise_s8_ansi

-

-#define esp_nn_depthwise_conv_s8 esp_nn_depthwise_conv_s8_ansi

-

-#define esp_nn_conv_s8 esp_nn_conv_s8_ansi

-

-#define esp_nn_get_conv_scratch_size esp_nn_get_conv_scratch_size_ansi

-#define esp_nn_set_conv_scratch_buf esp_nn_set_conv_scratch_buf_ansi

-

-#define esp_nn_get_depthwise_conv_scratch_size esp_nn_get_depthwise_conv_scratch_size_ansi

-#define esp_nn_set_depthwise_conv_scratch_buf esp_nn_set_depthwise_conv_scratch_buf_ansi

-

-#define esp_nn_relu6_s8 esp_nn_relu6_s8_ansi

-

-#define esp_nn_avg_pool_s8 esp_nn_avg_pool_s8_ansi

-#define esp_nn_max_pool_s8 esp_nn_max_pool_s8_ansi

-

-#define esp_nn_fully_connected_s8 esp_nn_fully_connected_s8_ansi

-

-#define esp_nn_get_softmax_scratch_size esp_nn_get_softmax_scratch_size_ansi

-#define esp_nn_set_softmax_scratch_buf esp_nn_set_softmax_scratch_buf_ansi

-#define esp_nn_softmax_s8 esp_nn_softmax_s8_ansi

diff --git a/code/components/esp-nn/include/esp_nn_ansi_headers.h b/code/components/esp-nn/include/esp_nn_ansi_headers.h

deleted file mode 100644

index 52ebb680..00000000

--- a/code/components/esp-nn/include/esp_nn_ansi_headers.h

+++ /dev/null

@@ -1,309 +0,0 @@

-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#pragma once

-

-/**

- * @file Header definitions to include for esp_nn reference functions

- */

-

-#include "esp_nn_defs.h"

-/************************** Basic math functions ****************************/

-

-/**

- * @brief elementwise addition

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- *

- * shift values are expected to be <= 0

- */

-void esp_nn_add_elementwise_s8_ansi(const int8_t *input1_data,

- const int8_t *input2_data,

- const int32_t input1_offset,

- const int32_t input2_offset,

- const int32_t input1_mult,

- const int32_t input2_mult,

- const int32_t input1_shift,

- const int32_t input2_shift,

- const int32_t left_shift,

- int8_t *output,

- const int32_t out_offset,

- const int32_t out_mult,

- const int32_t out_shift,

- const int32_t activation_min,

- const int32_t activation_max,

- const int32_t size);

-/**

- * @brief elementwise multiplication

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- *

- * output shift is expected to be <= 0

- */

-void esp_nn_mul_elementwise_s8_ansi(const int8_t *input1_data,

- const int8_t *input2_data,

- const int32_t input1_offset,

- const int32_t input2_offset,

- int8_t *output,

- const int32_t out_offset,

- const int32_t out_mult,

- const int32_t out_shift,

- const int32_t activation_min,

- const int32_t activation_max,

- const int32_t size);

-

-

-/************************** Convolution functions *****************************/

-

-/**

- * @brief depthwise convolution per channel

- *

- * @note inputs type: int8_t, output: int8_t

- * Version used in tflite is per channel.

- * This version follows the same footsprints.

- * Meaning, it has per out_channel shift and multiplier for

- * requantization

- *

- * optimization notes: Though input_offset is int32 type,

- * offset values are contained in 8 bits [-128, 127]

- */

-void esp_nn_depthwise_conv_s8_ansi(const data_dims_t *input_dims,

- const int8_t *input_data,

- const data_dims_t *filter_dims,

- const int8_t *filter_data,

- const int32_t *bias,

- const data_dims_t *output_dims,

- int8_t *out_data,

- const dw_conv_params_t *conv_params,

- const quant_data_t *quant_data);

-

-/**

- * @brief 2d-convolution channelwise

- *

- * @note operation: result += (input + offset) * filter

- *

- * inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- */

-void esp_nn_conv_s8_ansi(const data_dims_t *input_dims,

- const int8_t *input_data,

- const data_dims_t *filter_dims,

- const int8_t *filter_data,

- const int32_t *bias,

- const data_dims_t *output_dims,

- int8_t *out_data,

- const conv_params_t *conv_params,

- const quant_data_t *quant_data);

-

-int esp_nn_get_conv_scratch_size_ansi(const data_dims_t *input_dims,

- const data_dims_t *filter_dims,

- const data_dims_t *output_dims,

- const conv_params_t *conv_params);

-void esp_nn_set_conv_scratch_buf_ansi(const void *buf);

-

-int esp_nn_get_depthwise_conv_scratch_size_ansi(const data_dims_t *input_dims,

- const data_dims_t *filter_dims,

- const data_dims_t *output_dims,

- const dw_conv_params_t *conv_params);

-void esp_nn_set_depthwise_conv_scratch_buf_ansi(const void *buf);

-

-/************************** Activation functions *****************************/

-

-/**

- * @brief relu6

- *

- * @note inout: int8_t

- */

-void esp_nn_relu6_s8_ansi(int8_t *data, uint16_t size);

-

-/************************** Pooling functions *****************************/

-

-

-/**

- * @brief max_pool

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- */

-void esp_nn_max_pool_s8_ansi(const int8_t *input,

- const uint16_t input_wd,

- const uint16_t input_ht,

- int8_t *output,

- const uint16_t output_wd,

- const uint16_t output_ht,

- const uint16_t stride_wd,

- const uint16_t stride_ht,

- const uint16_t filter_wd,

- const uint16_t filter_ht,

- const uint16_t pad_wd,

- const uint16_t pad_ht,

- const int32_t activation_min,

- const int32_t activation_max,

- const uint16_t channels);

-

-/**

- * @brief avg_pool

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- */

-void esp_nn_avg_pool_s8_ansi(const int8_t *input,

- const uint16_t input_wd,

- const uint16_t input_ht,

- int8_t *output,

- const uint16_t output_wd,

- const uint16_t output_ht,

- const uint16_t stride_wd,

- const uint16_t stride_ht,

- const uint16_t filter_wd,

- const uint16_t filter_ht,

- const uint16_t pad_wd,

- const uint16_t pad_ht,

- const int32_t activation_min,

- const int32_t activation_max,

- const uint16_t channels);

-

-

-/************************** Fully connected functions ***********************/

-

-/**

- * @brief fully connected

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- */

-void esp_nn_fully_connected_s8_ansi(const int8_t *input_data,

- const int32_t input_offset,

- const uint16_t row_len,

- const int8_t *filter_data,

- const int32_t filter_offset,

- const int32_t *bias,

- int8_t *out_data,

- const uint16_t out_channels,

- const int32_t out_offset,

- const int32_t out_shift,

- const int32_t out_mult,

- const int32_t activation_min,

- const int32_t activation_max);

-

-/**

- * @brief Get scratch buffer size needed by softmax function

- *

- * @param width

- * @param height

- * @return size in bytes

- *

- * @note buffer must be 4 byte aligned

- */

-int32_t esp_nn_get_softmax_scratch_size_ansi(const int32_t width, const int32_t height);

-

-/* ANSI C function to be hooked up when optimised version needed */

-int32_t esp_nn_get_softmax_scratch_size_opt(const int32_t width, const int32_t height);

-

-/**

- * @brief Set scratch buffer to be used by softmax function

- *

- * @param buffer this can be NULL if one needs to unset it

- * must be aligned to 4 bytes

- */

-void esp_nn_set_softmax_scratch_buf_ansi(void *buffer);

-

-/**

- * @brief reference softmax function

- *

- * @note inputs type: int8_t, output: int8_t

- */

-void esp_nn_softmax_s8_ansi(const int8_t *input_data,

- const int32_t height,

- const int32_t width,

- const int32_t mult,

- const int32_t shift,

- const int32_t diff_min,

- int8_t *output_data);

-

-

-//////////////////////////// Generic optimisations /////////////////////////////

-

-/************************** Convolution functions *****************************/

-

-/**

- * @brief 2d-convolution channelwise optimized version

- *

- * @note operation: result += (input + offset) * filter

- *

- * inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- */

-void esp_nn_conv_s8_opt(const data_dims_t *input_dims,

- const int8_t *input_data,

- const data_dims_t *filter_dims,

- const int8_t *filter_data,

- const int32_t *bias,

- const data_dims_t *output_dims,

- int8_t *out_data,

- const conv_params_t *conv_params,

- const quant_data_t *quant_data);

-

-/**

- * @brief depthwise convolution per channel optimized version

- *

- * @note inputs type: int8_t, output: int8_t

- * Version used in tflite is per channel.

- * This version follows the same footprints.

- * Meaning, it has per out_channel shift and multiplier for

- * requantization

- *

- * optimization notes: Though input_offset is int32 type,

- * offset values are contained in 8 bits [-128, 127]

- */

-void esp_nn_depthwise_conv_s8_opt(const data_dims_t *input_dims,

- const int8_t *input_data,

- const data_dims_t *filter_dims,

- const int8_t *filter_data,

- const int32_t *bias,

- const data_dims_t *output_dims,

- int8_t *out_data,

- const dw_conv_params_t *conv_params,

- const quant_data_t *quant_data);

-

-int esp_nn_get_conv_scratch_size_opt(const data_dims_t *input_dims,

- const data_dims_t *filter_dims,

- const data_dims_t *output_dims,

- const conv_params_t *conv_params);

-void esp_nn_set_conv_scratch_buf_opt(const void *buf);

-

-int esp_nn_get_depthwise_conv_scratch_size_opt(const data_dims_t *input_dims,

- const data_dims_t *filter_dims,

- const data_dims_t *output_dims,

- const dw_conv_params_t *conv_params);

-void esp_nn_set_depthwise_conv_scratch_buf_opt(const void *buf);

-

-/* ANSI C function to be hooked up when optimised version needed */

-void esp_nn_set_softmax_scratch_buf_opt(void *buffer);

-

-/**

- * @brief optimised version of softmax function

- *

- * @note the function uses an extra buffer (4 * width bytes)

- * hence, scratch buffers must be set before calling this.

- */

-void esp_nn_softmax_s8_opt(const int8_t *input_data,

- const int32_t height,

- const int32_t width,

- const int32_t mult,

- const int32_t shift,

- const int32_t diff_min,

- int8_t *output_data);
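The softmax notes above say the optimised version needs a 4-byte-aligned scratch buffer of `4 * width` bytes that must be set before the call. A minimal sketch of allocating one, assuming the size from that note; `alloc_softmax_scratch` is a hypothetical helper, and real code should prefer the size reported by `esp_nn_get_softmax_scratch_size(width, height)`:

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical helper: allocate scratch space for the optimised softmax.
 * The size follows the "4 * width bytes" note (an assumption); real code
 * should use the value returned by esp_nn_get_softmax_scratch_size(). */
static void *alloc_softmax_scratch(int32_t width, size_t *out_size)
{
    if (width <= 0) {
        return NULL;
    }
    size_t size = 4u * (size_t) width;
    /* malloc returns memory aligned for any object type, which covers the
     * 4-byte alignment requirement. */
    void *buf = malloc(size);
    if (buf != NULL && out_size != NULL) {
        *out_size = size;
    }
    return buf;
}
```

A caller would pass the buffer to `esp_nn_set_softmax_scratch_buf()` before calling `esp_nn_softmax_s8()`, and unset it with `NULL` (as the setter's documentation allows) before freeing it.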

diff --git a/code/components/esp-nn/include/esp_nn_defs.h b/code/components/esp-nn/include/esp_nn_defs.h

deleted file mode 100644

index 756d8e6f..00000000

--- a/code/components/esp-nn/include/esp_nn_defs.h

+++ /dev/null

@@ -1,83 +0,0 @@

-// Copyright 2022 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#pragma once

-

-#include

-

-/**

- * @brief structure to group data dimensions

- * this structure can be used for input, output and filter

- */

-typedef struct data_dims {

- int32_t width;

- int32_t height;

- int32_t channels;

-

- int32_t extra; // can be used as batch or any other param

-} data_dims_t;

-

-/**

- * @brief 2d data structure (width, height)

- *

- */

-typedef struct data_2d {

- int32_t width;

- int32_t height;

-} data_2d_t;

-

-/**

- * @brief min/max activation

- */

-typedef struct act_params {

- int32_t min;

- int32_t max;

-} act_params_t;

-

-/**

- * @brief per channel quant data

- *

- * @note number of shift and mult elements are equal to output channels

- */

-typedef struct quant_data {

- int32_t *shift;

- int32_t *mult;

-} quant_data_t;

-

-/**

- * @brief params specific to convolution 2d

- *

- */

-typedef struct conv_params {

- int32_t in_offset;

- int32_t out_offset;

- data_2d_t stride;

- data_2d_t padding;

- data_2d_t dilation;

- act_params_t activation;

-} conv_params_t;

-

-/**

- * @brief params specific to depthwise convolution 2d

- *

- */

-typedef struct dw_conv_params {

- int32_t in_offset;

- int32_t out_offset;

- int32_t ch_mult; // channel multiplier. (in_ch * ch_mult = out_ch)

- data_2d_t stride;

- data_2d_t padding;

- data_2d_t dilation;

- act_params_t activation;

-} dw_conv_params_t;
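The `data_dims_t` and `*_params_t` structs above carry tensor shapes and per-layer settings, while `output_dims` is supplied by the caller. A minimal sketch of deriving it, assuming the conventional convolution output-size formula (symmetric zero padding, stride, dilation); `conv_out_len` and `conv_output_dims` are hypothetical helpers, and the structs are re-declared locally so the block compiles on its own:

```c
#include <stdint.h>

/* Local copies of the esp-nn structs above, so the sketch is self-contained. */
typedef struct { int32_t width, height, channels, extra; } data_dims_t;
typedef struct { int32_t width, height; } data_2d_t;

/* Conventional formula (assumption):
 * out = (in + 2*pad - (dilation*(k - 1) + 1)) / stride + 1 */
static int32_t conv_out_len(int32_t in, int32_t k, int32_t stride,
                            int32_t pad, int32_t dilation)
{
    int32_t effective_k = dilation * (k - 1) + 1;
    return (in + 2 * pad - effective_k) / stride + 1;
}

static data_dims_t conv_output_dims(const data_dims_t *in, const data_dims_t *filter,
                                    data_2d_t stride, data_2d_t pad,
                                    data_2d_t dilation, int32_t out_channels)
{
    data_dims_t out = {
        .width = conv_out_len(in->width, filter->width, stride.width,
                              pad.width, dilation.width),
        .height = conv_out_len(in->height, filter->height, stride.height,
                               pad.height, dilation.height),
        .channels = out_channels,
        .extra = in->extra, /* e.g. batch, carried through unchanged */
    };
    return out;
}
```

For depthwise convolution, `out_channels` would be `in->channels * ch_mult`, following the comment on `dw_conv_params_t`.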

diff --git a/code/components/esp-nn/include/esp_nn_esp32s3.h b/code/components/esp-nn/include/esp_nn_esp32s3.h

deleted file mode 100644

index 0f52c943..00000000

--- a/code/components/esp-nn/include/esp_nn_esp32s3.h

+++ /dev/null

@@ -1,231 +0,0 @@

-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-/**

- * @file Header definitions to include for esp_nn optimized functions for

- * the ESP32-S3 platform

- */

-

-#pragma once

-

-#include "esp_nn_defs.h"

-#include "esp_nn_ansi_headers.h"

-

-/************************** Basic math functions *****************************/

-

-

-/**

- * @brief elementwise addition

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- *

- * shift values are expected to be <= 0

- */

-void esp_nn_add_elementwise_s8_esp32s3(const int8_t *input1_data,

- const int8_t *input2_data,

- const int32_t input1_offset,

- const int32_t input2_offset,

- const int32_t input1_mult,

- const int32_t input2_mult,

- const int32_t input1_shift,

- const int32_t input2_shift,

- const int32_t left_shift,

- int8_t *output,

- const int32_t out_offset,

- const int32_t out_mult,

- const int32_t out_shift,

- const int32_t activation_min,

- const int32_t activation_max,

- const int32_t size);

-

-/**

- * @brief elementwise multiplication

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- *

- * output shift is expected to be <= 0

- */

-void esp_nn_mul_elementwise_s8_esp32s3(const int8_t *input1_data,

- const int8_t *input2_data,

- const int32_t input1_offset,

- const int32_t input2_offset,

- int8_t *output,

- const int32_t out_offset,

- const int32_t out_mult,

- const int32_t out_shift,

- const int32_t activation_min,

- const int32_t activation_max,

- const int32_t size);

-

-

-/************************** Convolution functions *****************************/

-

-/**

- * @brief depthwise convolution per channel

- *

- * @note inputs type: int8_t, output: int8_t

- * Version used in tflite is per channel.

- * This version follows the same footprints.

- * Meaning, it has per out_channel shift and multiplier for

- * requantization

- *

- * optimization notes: Though input_offset is int32 type,

- * offset values are contained in 8 bits [-128, 127]

- */

-void esp_nn_depthwise_conv_s8_esp32s3(const data_dims_t *input_dims,

- const int8_t *input_data,

- const data_dims_t *filter_dims,

- const int8_t *filter_data,

- const int32_t *bias,

- const data_dims_t *output_dims,

- int8_t *output_data,

- const dw_conv_params_t *conv_params,

- const quant_data_t *quant_data);

-

-/**

- * @brief 2d - convolution channelwise

- *

- * @note operation: result += (input + offset) * filter

- *

- * inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- */

-void esp_nn_conv_s8_esp32s3(const data_dims_t *input_dims,

- const int8_t *input_data,

- const data_dims_t *filter_dims,

- const int8_t *filter_data,

- const int32_t *bias,

- const data_dims_t *output_dims,

- int8_t *output_data,

- const conv_params_t *conv_params,

- const quant_data_t *quant_data);

-

-int esp_nn_get_conv_scratch_size_esp32s3(const data_dims_t *input_dims,

- const data_dims_t *filter_dims,

- const data_dims_t *output_dims,

- const conv_params_t *conv_params);

-void esp_nn_set_conv_scratch_buf_esp32s3(const void *buf);

-

-int esp_nn_get_depthwise_conv_scratch_size_esp32s3(const data_dims_t *input_dims,

- const data_dims_t *filter_dims,

- const data_dims_t *output_dims,

- const dw_conv_params_t *conv_params);

-void esp_nn_set_depthwise_conv_scratch_buf_esp32s3(const void *buf);

-

-/************************** Pooling functions *****************************/

-

-/**

- * @brief max_pool

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- */

-void esp_nn_max_pool_s8_esp32s3(const int8_t *input,

- const uint16_t input_wd,

- const uint16_t input_ht,

- int8_t *output,

- const uint16_t output_wd,

- const uint16_t output_ht,

- const uint16_t stride_wd,

- const uint16_t stride_ht,

- const uint16_t filter_wd,

- const uint16_t filter_ht,

- const uint16_t pad_wd,

- const uint16_t pad_ht,

- const int32_t activation_min,

- const int32_t activation_max,

- const uint16_t channels);

-

-/**

- * @brief avg_pool

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- */

-void esp_nn_avg_pool_s8_esp32s3(const int8_t *input,

- const uint16_t input_wd,

- const uint16_t input_ht,

- int8_t *output,

- const uint16_t output_wd,

- const uint16_t output_ht,

- const uint16_t stride_wd,

- const uint16_t stride_ht,

- const uint16_t filter_wd,

- const uint16_t filter_ht,

- const uint16_t pad_wd,

- const uint16_t pad_ht,

- const int32_t activation_min,

- const int32_t activation_max,

- const uint16_t channels);

-

-

-/************************** Fully connected functions *****************************/

-

-/**

- * @brief fully connected

- *

- * @note inputs type: int8_t, output: int8_t

- * input offsets: although int32_t, they are contained in 8 bits [-128, 127]

- *

- * Current version works only on aligned input.

- * row_len and channels should both be multiples of 8.

- */

-void esp_nn_fully_connected_s8_esp32s3(const int8_t *input_data,

- const int32_t input_offset,

- const uint16_t row_len,

- const int8_t *filter_data,

- const int32_t filter_offset,

- const int32_t *bias,

- int8_t *out_data,

- const uint16_t out_channels,

- const int32_t out_offset,

- const int32_t out_shift,

- const int32_t out_mult,

- const int32_t activation_min,

- const int32_t activation_max);

-

-/**

- * @brief relu6

- *

- * @note inout: int8_t

- */

-void esp_nn_relu6_s8_esp32s3(int8_t *data, uint16_t size);

-

-/********************** function defines ***************************/

-

-#define esp_nn_add_elementwise_s8 esp_nn_add_elementwise_s8_esp32s3

-#define esp_nn_mul_elementwise_s8 esp_nn_mul_elementwise_s8_esp32s3

-

-#define esp_nn_depthwise_conv_s8 esp_nn_depthwise_conv_s8_esp32s3

-

-#define esp_nn_get_conv_scratch_size esp_nn_get_conv_scratch_size_esp32s3

-#define esp_nn_set_conv_scratch_buf esp_nn_set_conv_scratch_buf_esp32s3

-

-#define esp_nn_get_depthwise_conv_scratch_size esp_nn_get_depthwise_conv_scratch_size_esp32s3

-#define esp_nn_set_depthwise_conv_scratch_buf esp_nn_set_depthwise_conv_scratch_buf_esp32s3

-

-#define esp_nn_conv_s8 esp_nn_conv_s8_esp32s3

-

-#define esp_nn_relu6_s8 esp_nn_relu6_s8_esp32s3

-

-#define esp_nn_avg_pool_s8 esp_nn_avg_pool_s8_esp32s3

-#define esp_nn_max_pool_s8 esp_nn_max_pool_s8_esp32s3

-

-#define esp_nn_fully_connected_s8 esp_nn_fully_connected_s8_esp32s3

-

-#define esp_nn_get_softmax_scratch_size esp_nn_get_softmax_scratch_size_opt

-#define esp_nn_set_softmax_scratch_buf esp_nn_set_softmax_scratch_buf_opt

-#define esp_nn_softmax_s8 esp_nn_softmax_s8_opt
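The header notes that the ESP32-S3 fully connected kernel only handles aligned shapes, yet the macro maps `esp_nn_fully_connected_s8` straight to it. A caller-side guard could look like the sketch below; `fc_s3_shape_ok` is hypothetical, and it checks only the stated multiple-of-8 rule, not any pointer-alignment requirement the kernel may also have:

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical guard mirroring the header note: row_len and out_channels
 * must both be multiples of 8 for the ESP32-S3 fully connected kernel. */
static bool fc_s3_shape_ok(uint16_t row_len, uint16_t out_channels)
{
    return (row_len % 8u == 0) && (out_channels % 8u == 0);
}
```

If the check fails, calling `esp_nn_fully_connected_s8_ansi` directly is one possible fallback, since it takes the same parameters as the ESP32-S3 variant.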

diff --git a/code/components/esp-nn/include/esp_nn_generic_opt.h b/code/components/esp-nn/include/esp_nn_generic_opt.h

deleted file mode 100644

index 136cba5d..00000000

--- a/code/components/esp-nn/include/esp_nn_generic_opt.h

+++ /dev/null

@@ -1,47 +0,0 @@

-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-/**

- * @file Header definitions to include for esp_nn generic optimisations

- * For functions that have no optimised version, the _ansi versions are used.

- */

-

-#pragma once

-

-#include "esp_nn_defs.h"

-#include "esp_nn_ansi_headers.h"

-

-#define esp_nn_add_elementwise_s8 esp_nn_add_elementwise_s8_ansi

-#define esp_nn_mul_elementwise_s8 esp_nn_mul_elementwise_s8_ansi

-

-#define esp_nn_depthwise_conv_s8 esp_nn_depthwise_conv_s8_opt

-

-#define esp_nn_conv_s8 esp_nn_conv_s8_opt

-

-#define esp_nn_get_conv_scratch_size esp_nn_get_conv_scratch_size_opt

-#define esp_nn_set_conv_scratch_buf esp_nn_set_conv_scratch_buf_opt

-

-#define esp_nn_get_depthwise_conv_scratch_size esp_nn_get_depthwise_conv_scratch_size_opt

-#define esp_nn_set_depthwise_conv_scratch_buf esp_nn_set_depthwise_conv_scratch_buf_opt

-

-#define esp_nn_relu6_s8 esp_nn_relu6_s8_ansi

-

-#define esp_nn_avg_pool_s8 esp_nn_avg_pool_s8_ansi

-#define esp_nn_max_pool_s8 esp_nn_max_pool_s8_ansi

-

-#define esp_nn_fully_connected_s8 esp_nn_fully_connected_s8_ansi

-

-#define esp_nn_get_softmax_scratch_size esp_nn_get_softmax_scratch_size_opt

-#define esp_nn_set_softmax_scratch_buf esp_nn_set_softmax_scratch_buf_opt

-#define esp_nn_softmax_s8 esp_nn_softmax_s8_opt
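Both platform headers select an implementation purely with `#define`: callers write the generic `esp_nn_*` name and the preprocessor substitutes the `_ansi`, `_opt` or `_esp32s3` variant. A self-contained sketch of the same pattern, with hypothetical `my_sum_s8_*` functions and a hypothetical `MY_USE_OPT` switch standing in for the target selection:

```c
#include <stdint.h>

/* Hypothetical reference and "optimised" variants with identical signatures. */
static int32_t my_sum_s8_ansi(const int8_t *x, int32_t n)
{
    int32_t acc = 0;
    for (int32_t i = 0; i < n; i++) {
        acc += x[i];
    }
    return acc;
}

static int32_t my_sum_s8_opt(const int8_t *x, int32_t n)
{
    /* Unrolled by two; the loop bound keeps x[i + 1] in range for odd n. */
    int32_t acc0 = 0, acc1 = 0, i = 0;
    for (; i + 1 < n; i += 2) {
        acc0 += x[i];
        acc1 += x[i + 1];
    }
    if (i < n) {
        acc0 += x[i];
    }
    return acc0 + acc1;
}

/* Compile-time selection, in the style of esp_nn_generic_opt.h. */
#ifndef MY_USE_OPT
#define MY_USE_OPT 1
#endif

#if MY_USE_OPT
#define my_sum_s8 my_sum_s8_opt
#else
#define my_sum_s8 my_sum_s8_ansi
#endif
```

The choice costs nothing at run time but is fixed at build time, which is why every variant must keep exactly the same signature.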

diff --git a/code/components/esp-nn/src/activation_functions/esp_nn_relu_ansi.c b/code/components/esp-nn/src/activation_functions/esp_nn_relu_ansi.c

deleted file mode 100644

index 1d4c3d11..00000000

--- a/code/components/esp-nn/src/activation_functions/esp_nn_relu_ansi.c

+++ /dev/null

@@ -1,30 +0,0 @@

-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#include

-#include

-

-#include

-

-void esp_nn_relu6_s8_ansi(int8_t *data, uint16_t size)

-{

- int32_t i;

-

- for (i = 0; i < size; i++) {

- int32_t ip = data[i];

-

- ip = max(ip, 0);

- data[i] = min(ip, 6);

- }

-}
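The reference relu6 clamps each stored int8 value to [0, 6] in place. It works on the raw quantized integers rather than on dequantized values, which is worth keeping in mind when comparing it with a real-valued ReLU6. A portable sketch of the same clamp that avoids the GNU statement-expression `max`/`min` macros; `relu6_s8_portable` is a hypothetical name:

```c
#include <stdint.h>

/* Same behaviour as esp_nn_relu6_s8_ansi: clamp raw int8 values to [0, 6].
 * size is uint16_t, as in the original, so at most 65535 elements. */
static void relu6_s8_portable(int8_t *data, uint16_t size)
{
    for (uint16_t i = 0; i < size; i++) {
        int32_t v = data[i];
        if (v < 0) {
            v = 0;
        } else if (v > 6) {
            v = 6;
        }
        data[i] = (int8_t) v;
    }
}
```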

diff --git a/code/components/esp-nn/src/basic_math/esp_nn_add_ansi.c b/code/components/esp-nn/src/basic_math/esp_nn_add_ansi.c

deleted file mode 100644

index 617386cf..00000000

--- a/code/components/esp-nn/src/basic_math/esp_nn_add_ansi.c

+++ /dev/null

@@ -1,97 +0,0 @@

-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#include

-

-#include

-

-void esp_nn_add_elementwise_u8_ansi(const uint8_t *input1_data,

- const uint8_t *input2_data,

- const int32_t input1_offset,

- const int32_t input2_offset,

- const int32_t input1_mult,

- const int32_t input2_mult,

- const int32_t input1_shift,

- const int32_t input2_shift,

- const int32_t left_shift,

- uint8_t *output,

- const int32_t out_offset,

- const int32_t out_mult,

- const int32_t out_shift,

- const int32_t activation_min,

- const int32_t activation_max,

- const int32_t size)

-{

- for (int i = 0; i < size; i++) {

- int32_t tmp1 = input1_data[i] + input1_offset;

- int32_t tmp2 = input2_data[i] + input2_offset;

-

- tmp1 <<= left_shift;

- tmp2 <<= left_shift;

-

- tmp1 = esp_nn_sat_round_doubling_high_mul(tmp1, input1_mult);

- tmp2 = esp_nn_sat_round_doubling_high_mul(tmp2, input2_mult);

-

- tmp1 = esp_nn_div_by_power_of_two(tmp1, -input1_shift);

- tmp2 = esp_nn_div_by_power_of_two(tmp2, -input2_shift);

-

- int32_t out = tmp1 + tmp2;

- out = esp_nn_sat_round_doubling_high_mul(out, out_mult);

- out = esp_nn_div_by_power_of_two(out, -out_shift);

- out = out + out_offset;

-

- out = max(activation_min, min(out, activation_max));

- output[i] = (uint8_t) out;

- }

-}

-

-void esp_nn_add_elementwise_s8_ansi(const int8_t *input1_data,

- const int8_t *input2_data,

- const int32_t input1_offset,

- const int32_t input2_offset,

- const int32_t input1_mult,

- const int32_t input2_mult,

- const int32_t input1_shift,

- const int32_t input2_shift,

- const int32_t left_shift,

- int8_t *output,

- const int32_t out_offset,

- const int32_t out_mult,

- const int32_t out_shift,

- const int32_t activation_min,

- const int32_t activation_max,

- const int32_t size)

-{

- for (int i = 0; i < size; i++) {

- int32_t tmp1 = input1_data[i] + input1_offset;

- int32_t tmp2 = input2_data[i] + input2_offset;

-

- tmp1 <<= left_shift;

- tmp2 <<= left_shift;

-

- tmp1 = esp_nn_sat_round_doubling_high_mul(tmp1, input1_mult);

- tmp2 = esp_nn_sat_round_doubling_high_mul(tmp2, input2_mult);

-

- tmp1 = esp_nn_div_by_power_of_two(tmp1, -input1_shift);

- tmp2 = esp_nn_div_by_power_of_two(tmp2, -input2_shift);

-

- int32_t out = tmp1 + tmp2;

- out = esp_nn_sat_round_doubling_high_mul(out, out_mult);

- out = esp_nn_div_by_power_of_two(out, -out_shift);

- out = out + out_offset;

-

- out = max(activation_min, min(out, activation_max));

- output[i] = (int8_t) out;

- }

-}
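Elementwise add rescales each offset input with `esp_nn_sat_round_doubling_high_mul` (a Q31 multiply rounded to nearest) and then `esp_nn_div_by_power_of_two` (a right shift that rounds half away from zero). The sketch below restates both helpers from `common_functions.h` in portable C to make the rounding visible; the names are local to the sketch, and it assumes `>>` on negative values is an arithmetic shift, as on GCC and Clang:

```c
#include <stdint.h>

/* round(a * b / 2^31), saturating the single overflow case MIN * MIN. */
static int32_t sat_round_doubling_high_mul(int32_t a, int32_t b)
{
    if (a == INT32_MIN && b == INT32_MIN) {
        return INT32_MAX;
    }
    int64_t prod = (int64_t) a * b;
    int64_t nudge = prod >= 0 ? (INT64_C(1) << 30) : (1 - (INT64_C(1) << 30));
    /* C division truncates toward zero, matching the original's sign fix-up. */
    return (int32_t) ((prod + nudge) / (INT64_C(1) << 31));
}

/* val / 2^exponent, rounding half away from zero (exponent in [0, 31]). */
static int32_t rounding_div_by_pot(int32_t val, int32_t exponent)
{
    const int32_t mask = (int32_t) ((INT64_C(1) << exponent) - 1);
    const int32_t remainder = val & mask;
    int32_t result = val >> exponent; /* arithmetic shift assumed */
    const int32_t threshold = (mask >> 1) + (result < 0);
    if (remainder > threshold) {
        result += 1;
    }
    return result;
}
```

Chaining them as `rounding_div_by_pot(sat_round_doubling_high_mul(x, 1 << 30), 1)` multiplies by 0.5 and then by 0.5 again, so `x = 10` maps to 3 (2.5 rounded away from zero).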

diff --git a/code/components/esp-nn/src/basic_math/esp_nn_mul_ansi.c b/code/components/esp-nn/src/basic_math/esp_nn_mul_ansi.c

deleted file mode 100644

index db8e8cc0..00000000

--- a/code/components/esp-nn/src/basic_math/esp_nn_mul_ansi.c

+++ /dev/null

@@ -1,42 +0,0 @@

-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#include

-

-#include

-

-void esp_nn_mul_elementwise_s8_ansi(const int8_t *input1_data,

- const int8_t *input2_data,

- const int32_t input1_offset,

- const int32_t input2_offset,

- int8_t *output,

- const int32_t out_offset,

- const int32_t out_mult,

- const int32_t out_shift,

- const int32_t activation_min,

- const int32_t activation_max,

- const int32_t size)

-{

- for (int i = 0; i < size; i++) {

- int32_t tmp1 = input1_data[i] + input1_offset;

- int32_t tmp2 = input2_data[i] + input2_offset;

-

- int32_t out = tmp1 * tmp2;

- out = esp_nn_multiply_by_quantized_mult(out, out_mult, out_shift);

- out = out + out_offset;

-

- out = max(activation_min, min(out, activation_max));

- output[i] = (int8_t) out;

- }

-}
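The `out_mult`/`out_shift` pair encodes a real rescale factor as a Q31 multiplier plus a power-of-two exponent, so that `scale ~= mult * 2^(shift - 31)`; `esp_nn_multiply_by_quantized_mult` left-shifts for positive `shift` and right-shifts for negative `shift`. esp-nn only receives these values. The sketch below shows one common way to derive them, modelled on the frexp-based approach TensorFlow Lite uses; `quantize_multiplier` is a hypothetical name, and it handles positive scales only:

```c
#include <stdint.h>

/* Split a positive real scale into a Q31 multiplier and a shift. */
static void quantize_multiplier(double scale, int32_t *mult, int32_t *shift)
{
    if (scale <= 0.0) {
        *mult = 0;
        *shift = 0;
        return;
    }
    /* Normalise scale = frac * 2^e with frac in [0.5, 1), like frexp(). */
    int32_t e = 0;
    double frac = scale;
    while (frac >= 1.0) {
        frac /= 2.0;
        e++;
    }
    while (frac < 0.5) {
        frac *= 2.0;
        e--;
    }
    /* Q31 multiplier rounded to nearest (frac is positive). */
    int64_t q = (int64_t) (frac * 2147483648.0 + 0.5);
    if (q == (INT64_C(1) << 31)) { /* rounding pushed frac up to 1.0 */
        q /= 2;
        e++;
    }
    *mult = (int32_t) q;
    *shift = e;
}
```

For example, a scale of 0.25 gives `mult = 1 << 30` and `shift = -1`; with those values, `esp_nn_multiply_by_quantized_mult(10, mult, shift)` yields 3 (2.5 rounded half away from zero).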

diff --git a/code/components/esp-nn/src/common/common_functions.h b/code/components/esp-nn/src/common/common_functions.h

deleted file mode 100644

index 0a74eca4..00000000

--- a/code/components/esp-nn/src/common/common_functions.h

+++ /dev/null

@@ -1,255 +0,0 @@

-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD

-//

-// Licensed under the Apache License, Version 2.0 (the "License");

-// you may not use this file except in compliance with the License.

-// You may obtain a copy of the License at

-//

-// http://www.apache.org/licenses/LICENSE-2.0

-//

-// Unless required by applicable law or agreed to in writing, software

-// distributed under the License is distributed on an "AS IS" BASIS,

-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-// See the License for the specific language governing permissions and

-// limitations under the License.

-

-#pragma once

-

-#include

-#include

-#include

-

-/**

- * The C99 standard does not guarantee that inline functions are inlined.

- * We need to use the always_inline attribute as well to force it.

- */

-#define __NN_FORCE_INLINE__ __attribute((always_inline)) static inline

-

-/* min/max macros */

-#ifndef max

-#define max(a, b) ({ \

- __typeof__ (a) _a = (a); \

- __typeof__ (b) _b = (b); \

- _a > _b ? _a : _b; \

-})

-

-#define min(a, b) ({ \

- __typeof__ (a) _a = (a); \

- __typeof__ (b) _b = (b); \

- _a < _b ? _a : _b; \

-})

-#endif

-

-__NN_FORCE_INLINE__ int32_t esp_nn_clz32(uint32_t in)

-{

-#if CONFIG_IDF_TARGET_ARCH_XTENSA

- __asm__ volatile("nsau %0, %0" : "+r" (in));

- return in;

-#elif defined(__GNUC__)

- return __builtin_clz(in);

-#else

- int32_t count = 32;

- uint32_t x = in, y = in >> 16;

- if (y != 0) {

- count -= 16;

- x = y;

- }

- y = x >> 8;

- if (y != 0) {

- count -= 8;

- x = y;

- }

- y = x >> 4;

- if (y != 0) {

- count -= 4;

- x = y;

- }

- y = x >> 2;

- if (y != 0) {

- count -= 2;

- x = y;

- }

- y = x >> 1;

- if (y != 0) {

- return count - 2;

- }

- return count - x;

-#endif

-}

-

-/**

- * Signed saturate a 32 bit value to 8 bits keeping output in 32 bit variable.

- */

-__NN_FORCE_INLINE__ int32_t esp_nn_saturate8(int32_t in)

-{

-#if CONFIG_IDF_TARGET_ARCH_XTENSA

- __asm__ volatile("clamps %0, %0, 7" : "+a"(in));

- return in;

-#else

- return max(INT8_MIN, min(in, INT8_MAX));

-#endif

-}

-

-__NN_FORCE_INLINE__ int32_t esp_nn_pick_sat_high32_of64(int64_t val64)

-{

- int32_t sign = (int32_t) (val64 >> 63);

- int32_t to_add = sign & ((1ul << 31) - 1);

- return (int32_t) ((int64_t) (val64 + to_add) >> 31);

-}

-

-__NN_FORCE_INLINE__ int32_t esp_nn_sat_round_doubling_high_mul(int32_t in0, int32_t in1)

-{

- int32_t result;

- int64_t in0_64 = (int64_t) in0;

- bool overflow = (in0 == in1) && (in0 == (int32_t) INT32_MIN);

-

- /* Nudge value */

- int64_t nudge_val = 1 << 30;

- if ((in0 < 0) ^ (in1 < 0)) {

- nudge_val = 1 - nudge_val;

- }

-

- /* Multiply and add nudge */

- int64_t mult = in0_64 * in1 + nudge_val;

-

- /* Round and pickup 32 bits */

- result = esp_nn_pick_sat_high32_of64(mult);

-

- return overflow ? INT32_MAX : result;

-}

-

-/**

- * fast version

- * this may overflow for values within `1 << (exponent - 1)` of INT32_MAX or INT32_MIN.

- * We can afford to do this because we are at the very last stage of the filter.

- * This is also a rare condition, as our output is going to be 8 bit.

- */

-__NN_FORCE_INLINE__ int32_t esp_nn_div_by_power_of_two_fast(int32_t val, int32_t exponent)

-{

- int32_t to_add = (1 << (exponent - 1)) - (val < 0);

- return (int32_t) ((val + to_add) >> exponent);

-}

-

-__NN_FORCE_INLINE__ int32_t esp_nn_div_by_power_of_two(int32_t val, int32_t exponent)

-{

- int32_t result;

-

- const int32_t mask = (1 << exponent) - 1;

- const int32_t remainder = val & mask;

-

- result = val >> exponent;

- int32_t threshold = (mask >> 1) + (result < 0);

-

- if (remainder > threshold) {

- result += 1;

- }

- return result;

-}

-

-__NN_FORCE_INLINE__ int32_t esp_nn_multiply_by_quantized_mult(int32_t x, int32_t mult, int32_t shift)

-{

- int32_t left_shift = shift > 0 ? shift : 0;

- int32_t right_shift = shift > 0 ? 0 : -shift;

- int32_t result = esp_nn_sat_round_doubling_high_mul(x * (1 << left_shift), mult);

- return esp_nn_div_by_power_of_two(result, right_shift);

-}

-

-__NN_FORCE_INLINE__ int32_t esp_nn_multiply_by_quantized_mult_fast(int32_t x, int32_t mult, int32_t shift)

-{

- int32_t left_shift = max(shift, 0);

- int32_t right_shift = left_shift - shift;

-

- int64_t nudge_val = 1 << 30;

- int64_t in0_64 = (int64_t) (x << left_shift);

-

- /* Multiply and add nudge */

- int64_t mult_64 = in0_64 * mult + nudge_val;

- int32_t result = (int32_t) (mult_64 >> 31);

- if (right_shift) {

- result = esp_nn_div_by_power_of_two_fast(result, right_shift);

- }

- return result;

-}

-

-static void esp_nn_aligned_s8_pad_with_value(const int8_t *src, int8_t *dst,

- const uint16_t input_wd,

- const uint16_t input_ht,

- const uint16_t channels,

- const int32_t pad_val,

- const uint16_t pad_wd,

- const uint16_t pad_ht)

-{

- /* memset with pad_val */

- memset(dst, pad_val, ((input_wd + 2 * pad_wd) * (input_ht + 2 * pad_ht)) * channels);

- dst += (pad_wd + input_wd + pad_wd) * channels * pad_ht;

-

- for (int i = 0; i < input_ht; i++) {

- dst += pad_wd * channels;

- for (int j = 0; j < input_wd * channels; j++) {

- *dst++ = *src++;

- }

- dst += pad_wd * channels;

- }

-}

-

-static void esp_nn_aligned_s8_pad_end_with_value(const int8_t *src, int8_t *dst,

- const uint16_t input_wd,

- const uint16_t input_ht,

- const uint16_t channels,

- const int32_t pad_val,

- const uint16_t pad_wd,

- const uint16_t pad_ht)

-{

- for (int i = 0; i < input_ht; i++) {

- for (int j = 0; j < input_wd * channels; j++) {

- *dst++ = *src++;

- }

- if (pad_wd) {

- memset(dst, pad_val, pad_wd * channels);

- dst += pad_wd * channels;

- }

- }

- /* pad end `pad_ht` lines at end */

- if (pad_ht) {

- memset(dst, pad_val, (input_wd + pad_wd) * pad_ht * channels);

- }

-}

-

-/**

- * @brief convert 8 bit input data to 16 bit

- *

- * @param src int8_t source data

- * @param dst int16_t dst data

- * @param size length of data

- * @param offset offset to be added to src data. Range: [-128, 127]

- */

-__NN_FORCE_INLINE__ void esp_nn_s8_to_s16_with_offset(const int8_t *src, int16_t *dst,

- const int size, const int32_t offset)

-{

- int i = 0;

- for (; i + 1 < size; i += 2) {

- dst[i + 0] = src[i + 0] + offset;

- dst[i + 1] = src[i + 1] + offset;

- }

- if(i < size) {

- dst[i] = src[i] + offset;

- }

-}

-

-/**

- * @brief convert 8 bit input data to 16 bit

- *

- * @param src int8_t source data

- * @param dst int16_t dst data

- * @param size length of data

- */

-__NN_FORCE_INLINE__ void esp_nn_s8_to_s16(const int8_t *src, int16_t *dst, const int size)

-{

- int i = 0;

- for (; i < size; i += 2) {

- dst[i + 0] = src[i + 0];

- dst[i + 1] = src[i + 1];

- }

- if(i < size) {

- dst[i] = src[i];

- }

-}
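Both removed widening helpers convert int8 data to int16 two elements at a time. `esp_nn_s8_to_s16` is the same conversion without an offset.

As written, both loops step by two while `i < size`. For an odd `size`, the last iteration reads `src[size]` and writes `dst[size]`, one element past the end. The trailing `if (i < size)` branch therefore never runs.

The sketch below shows the intended pair-then-tail pattern with the loop bound changed to `i + 1 < size`. The function name is hypothetical. Passing `offset = 0` gives the plain conversion.

```c
#include <assert.h>
#include <stdint.h>

/* Widen int8 values to int16, adding offset (documented range [-128, 127]).
 * With that range every result lies in [-256, 254], so it fits in int16.
 * Pairs are converted first; an odd trailing element is handled separately. */
static void s8_to_s16_with_offset(const int8_t *src, int16_t *dst, int size, int32_t offset)
{
    assert(size >= 0 && offset >= -128 && offset <= 127);
    int i = 0;
    for (; i + 1 < size; i += 2) {
        dst[i + 0] = (int16_t) (src[i + 0] + offset);
        dst[i + 1] = (int16_t) (src[i + 1] + offset);
    }
    if (i < size) {
        dst[i] = (int16_t) (src[i] + offset);
    }
}
```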

diff --git a/code/components/esp-nn/src/convolution/esp_nn_conv_ansi.c b/code/components/esp-nn/src/convolution/esp_nn_conv_ansi.c
deleted file mode 100644
index 677c0ad8..00000000
--- a/code/components/esp-nn/src/convolution/esp_nn_conv_ansi.c
+++ /dev/null
@@ -1,179 +0,0 @@
-// Copyright 2020-2021 Espressif Systems (Shanghai) PTE LTD
-//
-// Licensed under the Apache License, Version 2.0 (the "License");
-// you may not use this file except in compliance with the License.
-// You may obtain a copy of the License at
-//
-// http://www.apache.org/licenses/LICENSE-2.0
-//
-// Unless required by applicable law or agreed to in writing, software
-// distributed under the License is distributed on an "AS IS" BASIS,
-// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
-// See the License for the specific language governing permissions and
-// limitations under the License.
-
-#include
-
-#include
-
-int esp_nn_get_conv_scratch_size_ansi(const data_dims_t *input_dims,
- const data_dims_t *filter_dims,
- const data_dims_t *output_dims,
- const conv_params_t *conv_params)
-{
- return 0;
-}
-
-void esp_nn_set_conv_scratch_buf_ansi(const void *buf)
-{
-
-}
-
-/**
- * Assumption 1: i/p channels == o/p channels
- * Assumption 2: Pointers are valid
- * Assumption 3: dialation width = 1
- */
-void esp_nn_conv_u8_ansi(const uint8_t *input_data,
- const uint16_t input_wd,
- const uint16_t input_ht,
- const uint16_t in_channels,
- const int32_t input_offset,
- const uint16_t pad_wd,
- const uint16_t pad_ht,
- const uint16_t stride_wd,
- const uint16_t stride_ht,
- const uint8_t *filter_data,
- const uint16_t filter_wd,
- const uint16_t filter_ht,
- const int32_t filter_offset,
- const int32_t *bias,
- uint8_t *out_data,
- const uint16_t out_wd,
- const uint16_t out_ht,
- const uint16_t out_channels,
- const int32_t out_offset,
- const int32_t out_shift,
- const int32_t out_mult,
- const int32_t activation_min,
- const int32_t activation_max)
-{
- for (int out_y = 0; out_y < out_ht; out_y++) { //height loop
- const int16_t base_y = (out_y * stride_ht) - pad_ht;
- for (int out_x = 0; out_x < out_wd; out_x++) { //width_loop
- const int16_t base_x = (out_x * stride_wd) - pad_wd;
- for (int out_ch_idx = 0; out_ch_idx < out_channels; out_ch_idx++) {//channel_loop
- int32_t result = 0;
-
- /* Select filter so as the point doesn't lie outside block */
- int filter_y_start = max(0, -base_y);
- int filter_x_start = max(0, -base_x);
- int filter_y_end = min(filter_ht, input_ht - base_y);
- int filter_x_end = min(filter_wd, input_wd - base_x);
-
- for (int filter_y_idx = filter_y_start; filter_y_idx < filter_y_end; filter_y_idx++) {
- const int32_t idx_y = base_y + filter_y_idx;
- for (int filter_x_idx = filter_x_start; filter_x_idx < filter_x_end; filter_x_idx++) {
- const int32_t idx_x = base_x + filter_x_idx;
- for (int in_ch_idx = 0; in_ch_idx < in_channels; in_ch_idx++) {
- int32_t input_index = (idx_y * input_wd + idx_x) * in_channels + in_ch_idx;
- int32_t filter_index = ((out_ch_idx * filter_ht + filter_y_idx)
- * filter_wd + filter_x_idx) * in_channels
- + in_ch_idx;
- int32_t input_val = input_data[input_index] + input_offset;
- int32_t filter_val = filter_data[filter_index] + filter_offset;
- result += input_val * filter_val;
- }
- }
- }
- if (bias) {
- result += bias[out_ch_idx];
- }
- result = esp_nn_multiply_by_quantized_mult(result, out_mult, out_shift);
- result += out_offset;
- result = max(result, activation_min);
- result = min(result, activation_max);
-
- int out_index = (out_y * out_wd + out_x) * out_channels + out_ch_idx;
- out_data[out_index] = (uint8_t) result;
- }
- }
- }
-}
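`esp_nn_conv_u8_ansi` is a direct convolution over NHWC data. For each output pixel it clips the filter window to the input bounds instead of materializing a padded copy. It indexes the filter as `[out_ch][filter_ht][filter_wd][in_ch]`.

The loops iterate `in_channels` and `out_channels` independently. The header's "i/p channels == o/p channels" assumption is therefore not actually required by this kernel.

The sketch below covers only the accumulation step, for int8 data. It assumes a zero filter offset and leaves out bias, requantization and the activation clamp. The function and helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

static int imax(int a, int b) { return a > b ? a : b; }
static int imin(int a, int b) { return a < b ? a : b; }

/* Direct NHWC convolution producing raw int32 accumulators.
 * Filter layout: [out_ch][f_ht][f_wd][in_ch]. No bias or requantization. */
static void conv_acc_s8(const int8_t *in, int in_wd, int in_ht, int in_ch, int32_t in_offset,
                        const int8_t *filt, int f_wd, int f_ht,
                        int pad_wd, int pad_ht, int stride_wd, int stride_ht,
                        int32_t *out, int out_wd, int out_ht, int out_ch)
{
    assert(stride_wd > 0 && stride_ht > 0);
    for (int oy = 0; oy < out_ht; oy++) {
        const int base_y = oy * stride_ht - pad_ht;
        for (int ox = 0; ox < out_wd; ox++) {
            const int base_x = ox * stride_wd - pad_wd;
            /* Clip the filter window so no tap reads outside the input. */
            const int fy0 = imax(0, -base_y), fy1 = imin(f_ht, in_ht - base_y);
            const int fx0 = imax(0, -base_x), fx1 = imin(f_wd, in_wd - base_x);
            for (int oc = 0; oc < out_ch; oc++) {
                int32_t acc = 0;
                for (int fy = fy0; fy < fy1; fy++) {
                    for (int fx = fx0; fx < fx1; fx++) {
                        const int iy = base_y + fy, ix = base_x + fx;
                        for (int ic = 0; ic < in_ch; ic++) {
                            const int32_t iv = in[(iy * in_wd + ix) * in_ch + ic] + in_offset;
                            const int32_t fv = filt[((oc * f_ht + fy) * f_wd + fx) * in_ch + ic];
                            acc += iv * fv;
                        }
                    }
                }
                out[(oy * out_wd + ox) * out_ch + oc] = acc;
            }
        }
    }
}
```

Skipped taps contribute nothing to `acc`. The clipped window therefore behaves like padding whose offset-adjusted value is zero. That matches the input zero point, assuming the TFLite convention that `in_offset` is the negated input zero point.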

-
-/**
- * Assumption 1: i/p channels == o/p channels
- * Assumption 2: Pointers are valid
- * Assumption 3: dialation width = 1
- */
-void esp_nn_conv_s8_ansi(const data_dims_t *input_dims,
- const int8_t *input_data,
- const data_dims_t *filter_dims,
- const int8_t *filter_data,
- const int32_t *bias,
- const data_dims_t *output_dims,
- int8_t *out_data,
- const conv_params_t *conv_params,
- const quant_data_t *quant_data)
-{
- const uint16_t input_wd = input_dims->width;
- const uint16_t input_ht = input_dims->height;
- const uint16_t in_channels = input_dims->channels;
- const int32_t input_offset = conv_params->in_offset;
- const int32_t out_offset = conv_params->out_offset;
- const uint16_t pad_wd = conv_params->padding.width;
- const uint16_t pad_ht = conv_params->padding.height;